I tested ChatGPT-5 vs Grok 4 with 9 prompts — and there’s a clear winner

After comparing ChatGPT-5 vs Gemini and ChatGPT-5 vs Claude, I just had to know how OpenAI’s flagship model compared to the controversial Grok. ChatGPT-5 and Grok 4 are two of the most advanced AI chatbots available today.

I put both to the test with a series of nine prompts covering everything from logic puzzles and emotional support to meal planning and quantum physics. Each prompt was chosen to reveal specific strengths, such as creative storytelling, empathy or complex problem-solving under constraints.

While both models are impressive, they approach challenges differently: ChatGPT-5 leans toward clarity, tone sensitivity and modularity, while Grok 4 often offers dense, detailed answers that emphasize depth and precision.

So which is the best AI chatbot for you? Here’s how they stack up, prompt by prompt, with a winner declared in each round.

1. Complex problem-solving

Prompt: “A farmer has 17 sheep, and all but 9 run away. How many sheep are left? Explain your reasoning step-by-step.”

ChatGPT-5 gave a precise, correct answer (nine sheep) without any filler phrases.

Grok 4 also answered correctly, but its slightly verbose explanation was unnecessary and ultimately held it back from winning.

Winner: GPT-5 wins for a cleaner, tighter and more efficient response. Grok also offered the correct answer, but GPT-5 wins by a hair for adhering strictly to the prompt with zero redundancy.

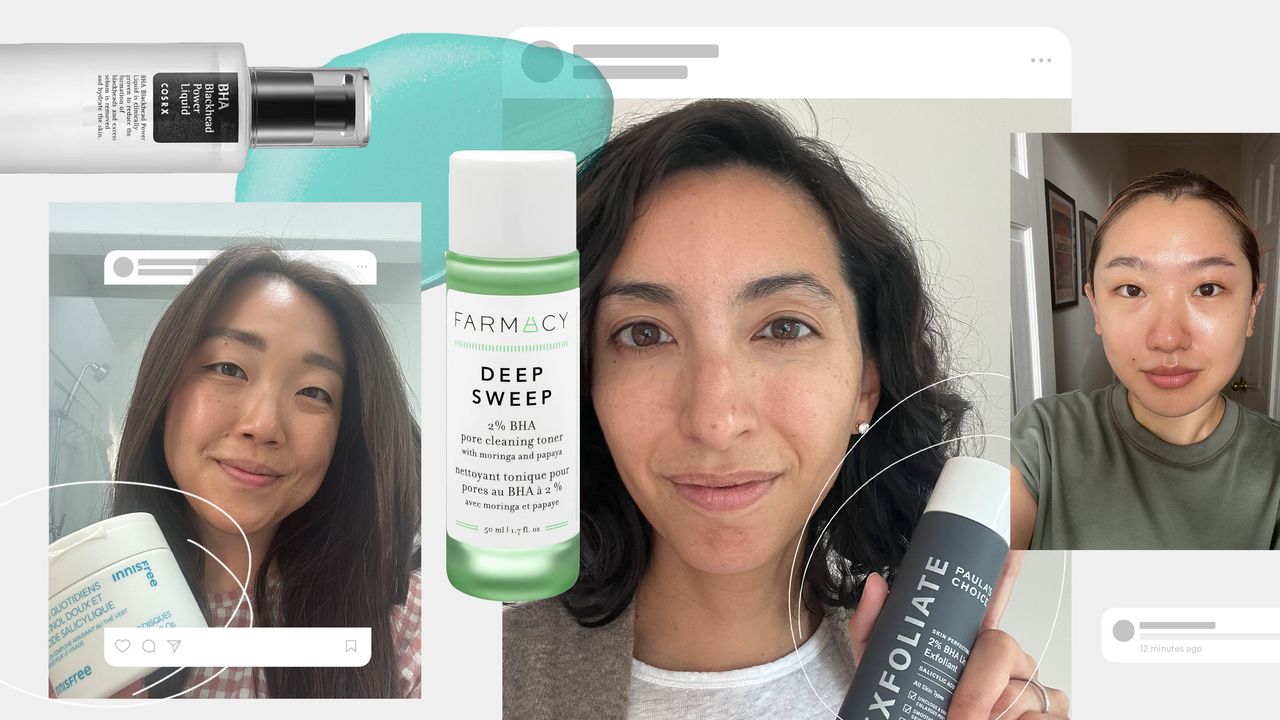

2. Creative storytelling

Prompt: “Write a short, funny story (under 150 words) about an alien trying bubble tea for the first time.”

ChatGPT-5 delivered a concise comedic story that escalates as the alien panics over the tapioca pearls. The chatbot maximized humor with zero wasted words while staying under the word limit.

Grok 4 offered imaginative, over-the-top storytelling, but its humor was slightly diluted by an unnecessary crash-landing setup and a weaker ending compared to GPT-5.

Winner: GPT-5 wins for a tighter, funnier and more focused story. Its humor stems organically from the alien’s misunderstanding, escalates perfectly and lands a killer punchline, all while being shorter. Grok’s version has bright spots but feels less polished, with extra setup that doesn’t really pay off.

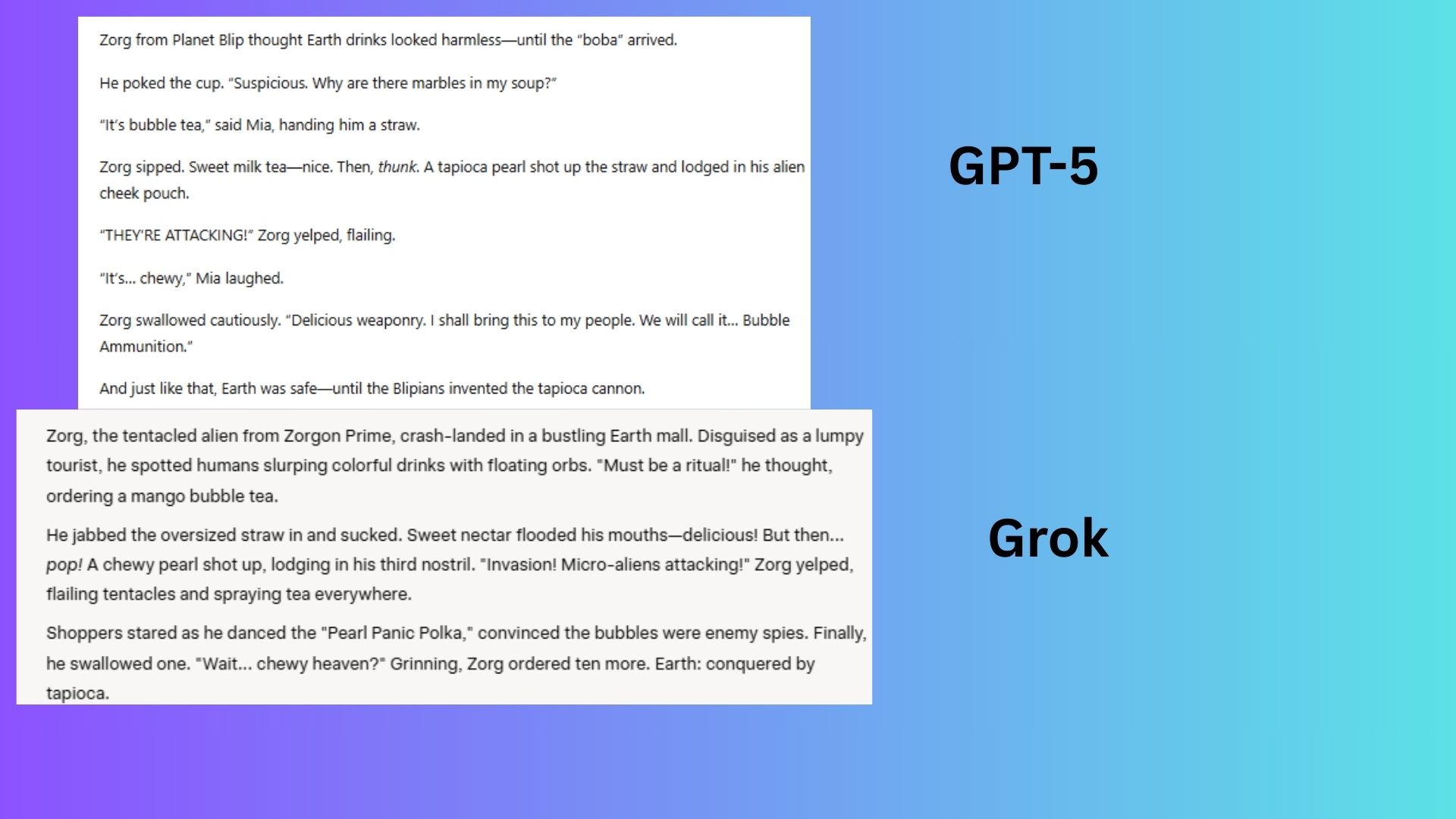

3. Real-world planning

Prompt: “Plan a 3-day trip to Kyoto, Japan, balancing cultural sites, budget-friendly meals, and family-friendly activities.”

ChatGPT-5 created a strategic, adaptable framework focused on area-based exploration, smart timing, rain-proof alternatives and practical budget hacks (e.g., convenience store meals, transit pass advice), prioritizing efficiency and real-world flexibility over rigid scheduling.

Grok 4 delivered a highly structured, hyper-detailed itinerary with minute-by-minute scheduling, exact cost breakdowns per activity, and explicit family logistics, prioritizing turnkey execution and budget precision above flexibility.

Winner: ChatGPT-5 wins for emphasizing budget-friendly, widely accessible cheap eats and convenience over specific restaurants. While Grok’s response is impressively detailed, GPT-5 better balanced the prompt’s core requirements, including cultural sites and family-friendly fun. Grok’s rigid schedule risks overwhelming families, while GPT-5’s approach leaves room to adapt, making it more usable and truly balanced.

4. Summarization

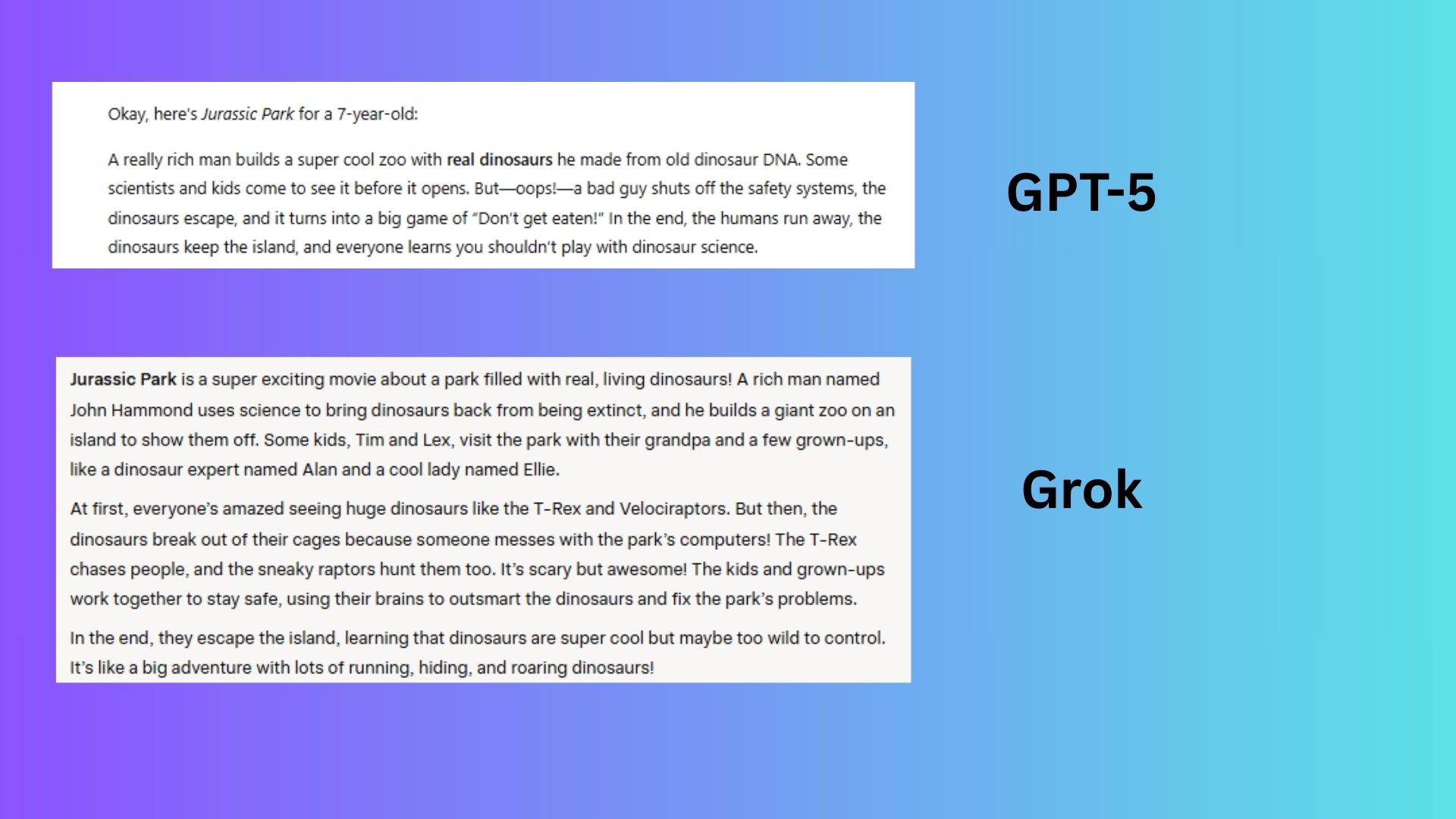

Prompt: “Summarize the movie Jurassic Park like you’re explaining to a 7-year-old.”

GPT-5 delivered a concise and playful 60-word analogy (“big game of ‘Don’t get eaten!’”) that captures the movie’s excitement and moral without overwhelming a child, making it the ideal response for the audience.

Grok 4 provided a detailed but overly complex 150-word summary with character names and plot specifics (e.g., “someone messes with the park’s computers”), diluting the simplicity needed for a 7-year-old.

Winner: GPT-5 wins for understanding its audience and their attention span, recognizing that less is more for young kids. Grok explained the plot like a Wikipedia summary.

5. Debate

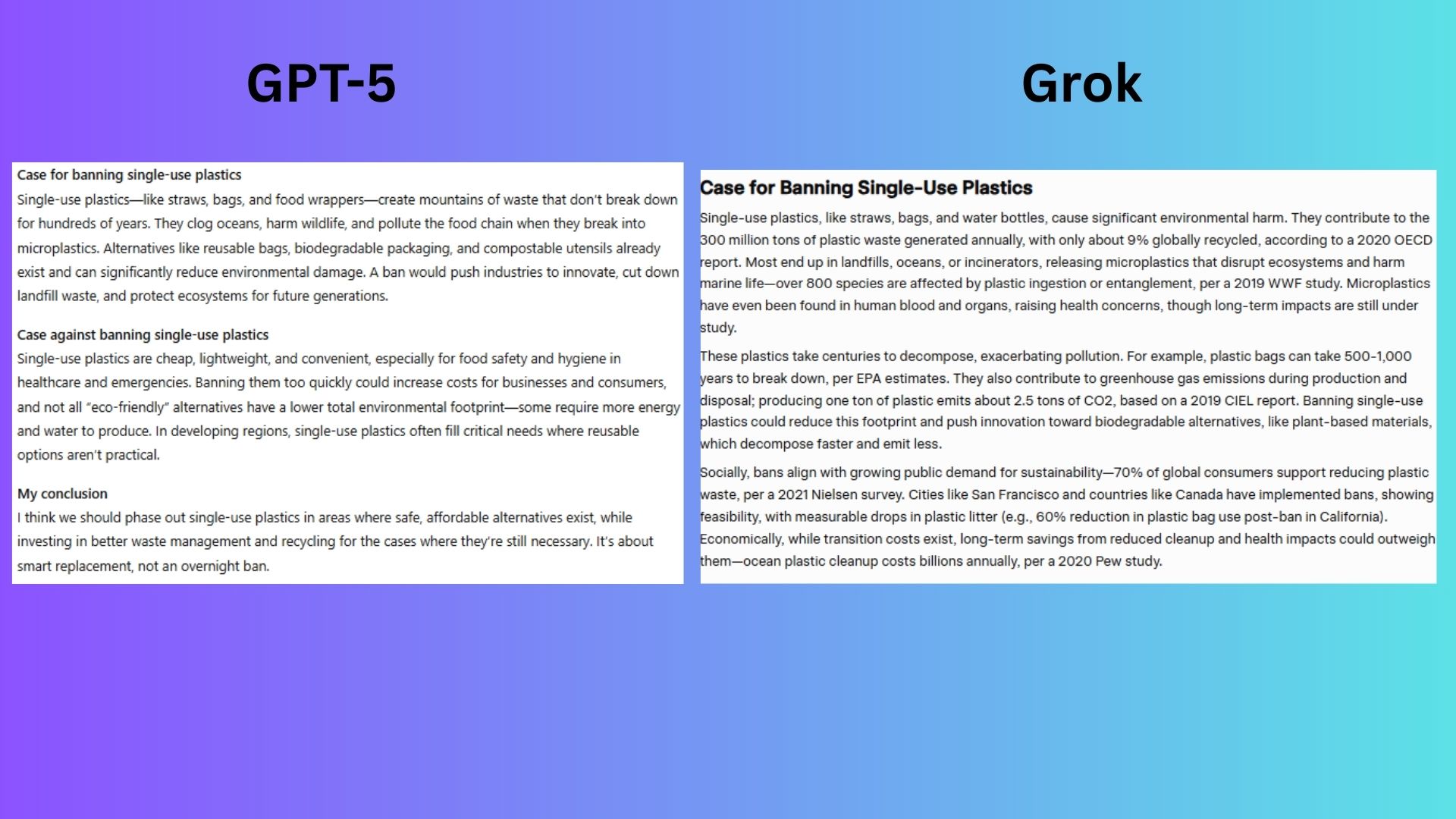

Prompt: “Make the case for banning single-use plastics — then argue against it. End with your personal conclusion.”

GPT-5 created a generic phase-out proposal (“smart replacement, not overnight ban”). While simple and accessible, the response lacked evidence, specificity and original insight.

Grok 4 delivered a data-rich argument with a nuanced “phased approach” prioritizing high-impact items, paired with recycling innovation and behavioral incentives (e.g., deposit schemes). Although slightly verbose for casual readers, its depth and balance helped put the issue in a real-world policy context.

Winner: Grok 4 wins for a balanced, evidence-driven analysis with concrete data (OECD, WWF, FAO studies), real-world policy examples (Canada, Australia) and acknowledgment of trade-offs (e.g., medical necessity, accessibility for people with disabilities). Its conclusion offered a sophisticated, actionable middle path. GPT-5’s response was clear but lacked depth and originality.

6. Step-by-step instructions

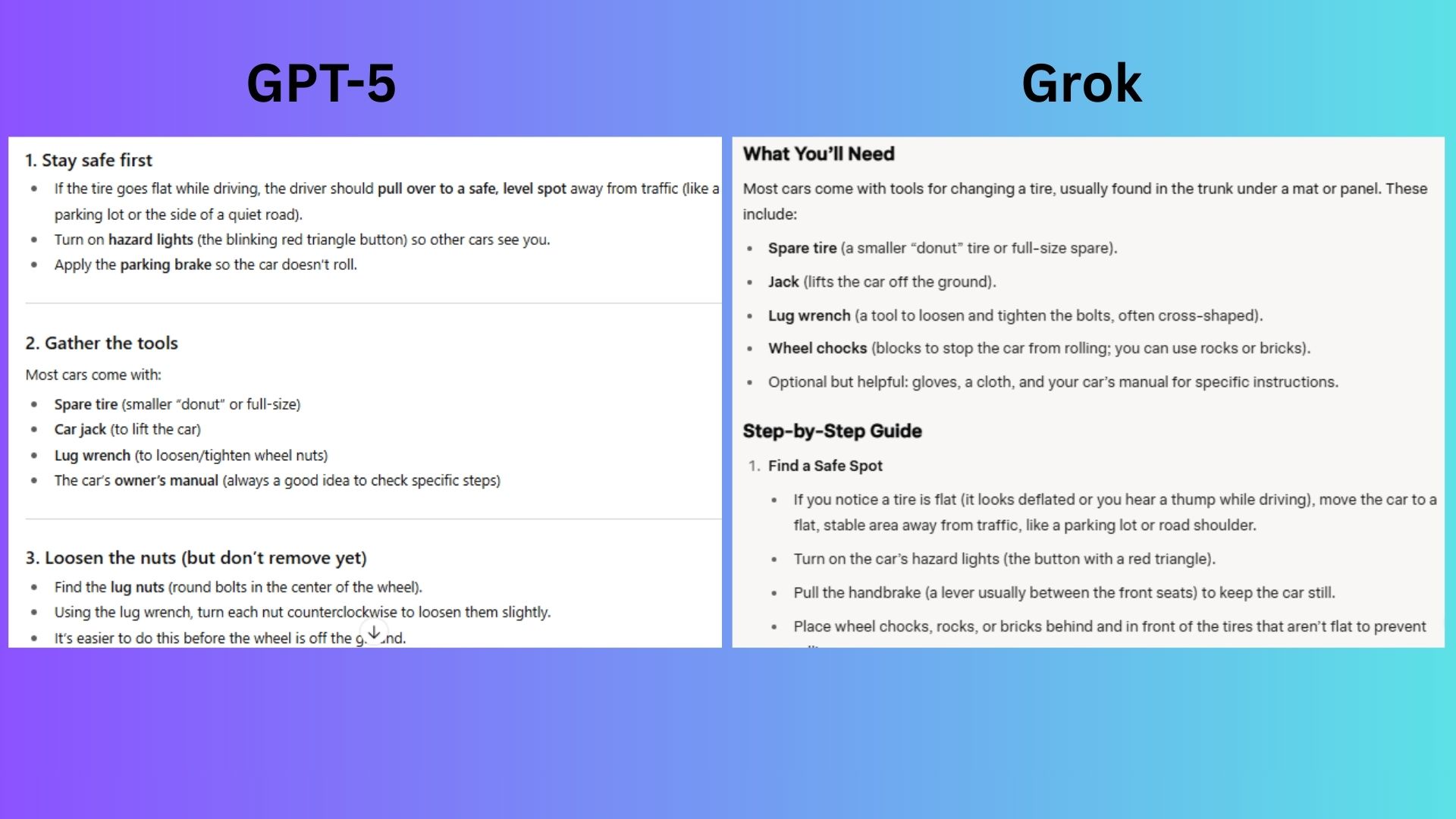

Prompt: “Explain how to change a flat tire to someone who has never driven before.”

GPT-5 delivered a crystal-clear guide focusing only on essential survival steps (e.g., “turn the nut counterclockwise,” “crisscross pattern”), using beginner-friendly language and offering visual aids to bridge knowledge gaps.

Grok 4 provided an excessively technical, mechanic-level tutorial (e.g., specifying “6 inches of lift,” wheel chock alternatives, and spare tire PSI checks) that would overwhelm someone who’s never changed a tire, despite good intentions.

Winner: GPT-5 wins for prioritizing simplicity and psychological reassurance for a total novice, using minimal jargon, clear analogies (“like learning to fix a bike tire”) and offering visual aid support. Grok’s response, while thorough, would overwhelm a first-timer with technical details (e.g., exact lift height, PSI checks) that go beyond what they need.

7. Explanation for multiple audiences

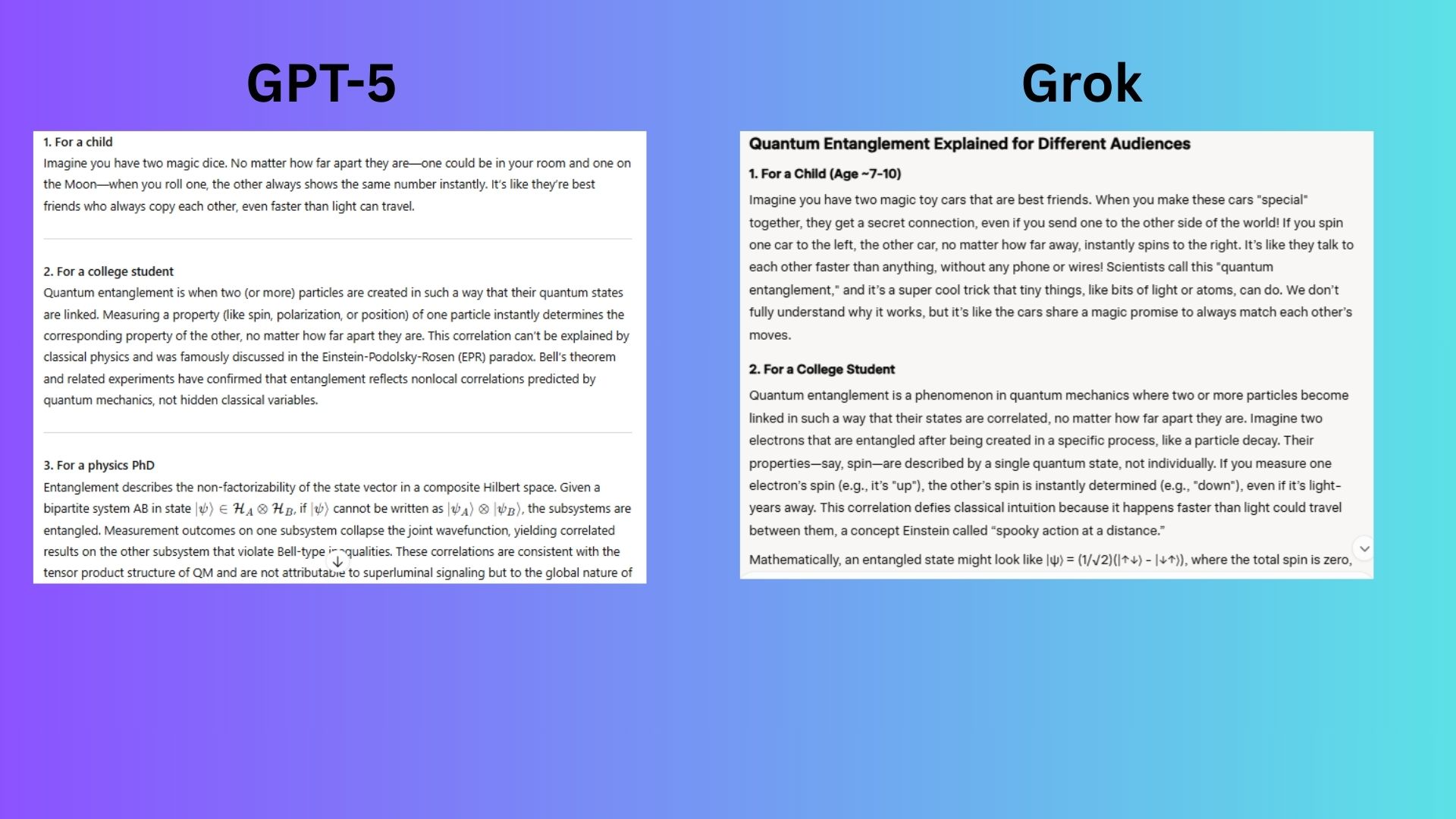

Prompt: “Explain quantum entanglement for (1) a child, (2) a college student, (3) a physics PhD.”

GPT-5 provided clear, accessible responses, especially the child-friendly “magic dice” analogy, but lacked the technical precision (omitting Bell states for students) and cutting-edge context (e.g., decoherence, quantum networks) expected at the PhD level.

Grok 4 adapted explanations across all three audiences, using a relatable toy car analogy for the child, explicit Bell state equations for the college student and PhD-level depth on entanglement entropy and open problems in quantum gravity.

Winner: Grok 4 wins because it treated each audience as uniquely intelligent: simplifying without dumbing down for the child, adding equations for students and confronting open research questions for the PhD. GPT-5 was clear but played it safe.

8. Problem-solving under constraints

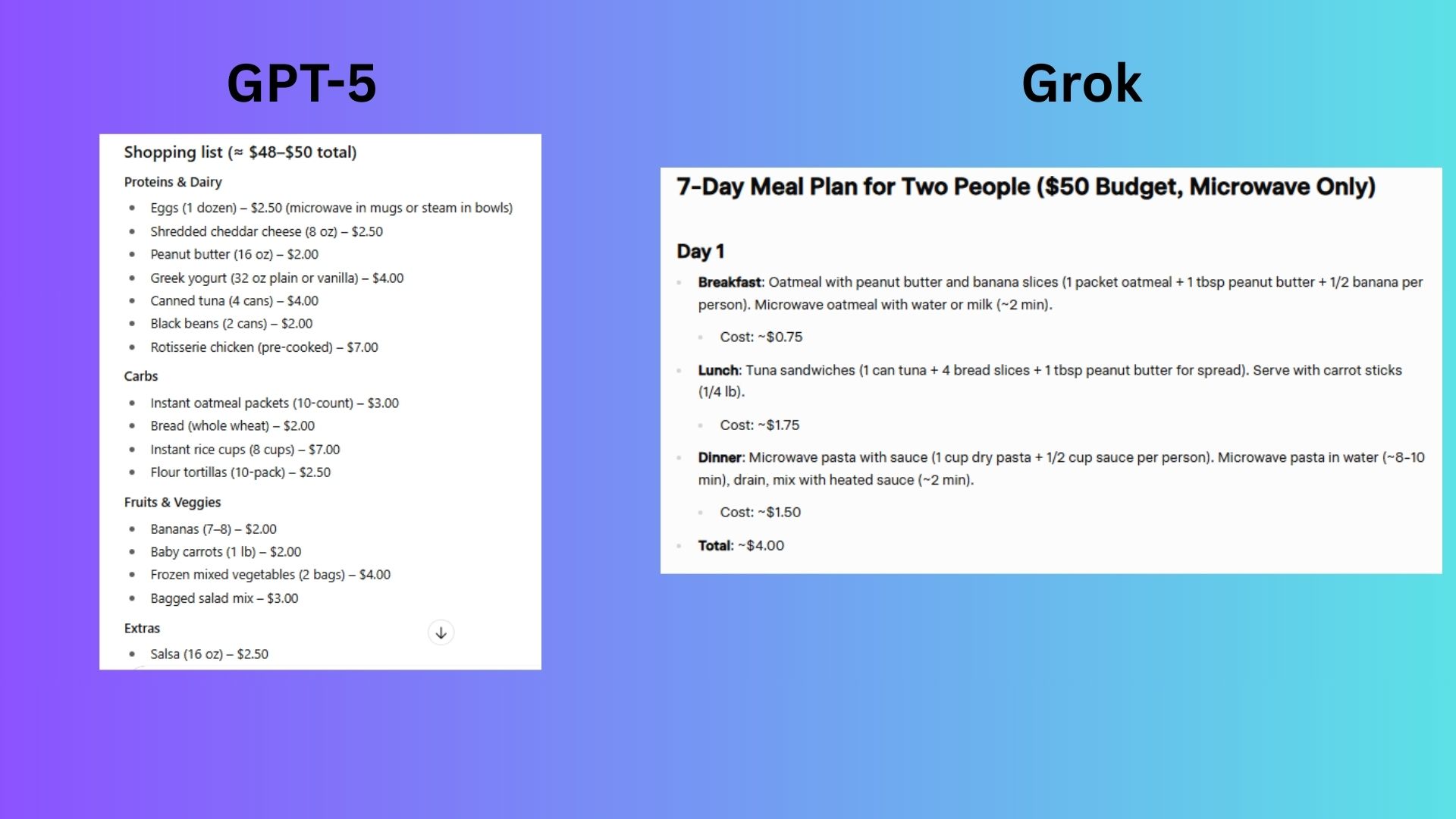

Prompt: “I have $50 to feed two people for a week, no stove, and only a microwave. Create a meal plan.”

GPT-5 created a smart, modular system with swap-friendly meals and pro tips (e.g., steaming frozen veg), maximizing budget and flexibility within constraints.

Grok 4 provided an overly rigid, day-by-day meal plan ($0.75 oatmeal breakfasts, fixed tuna lunches) that lacked adaptability, ignored leftovers and risked food fatigue, despite precise cost breakdowns.

Winner: GPT-5 wins for creating a practical, flexible framework focused on reusable ingredients and mix-and-match meals, while Grok’s rigid daily assignments ignored real-world needs like leftovers and preferences.

9. Emotional intelligence

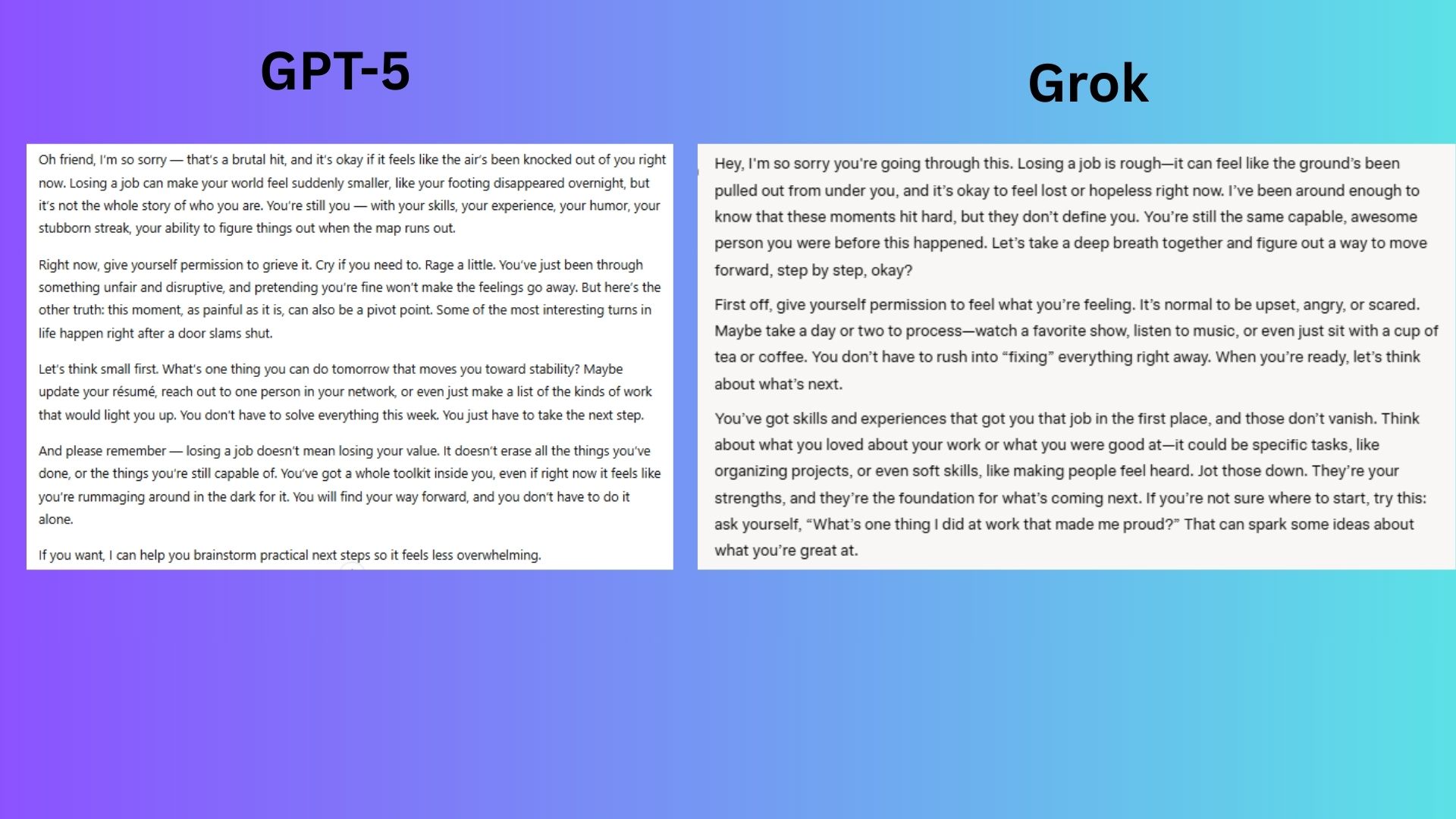

Prompt: “I just lost my job and feel hopeless. Can you talk to me like a close friend and help me see a way forward?”

GPT-5 offered emotion-first validation through intimate metaphors (“brutal hit”), permission to grieve (“Rage a little”), and unwavering worth-affirmation (“You’re still you”), perfectly mirroring how a true friend responds before offering practical help.

Grok 4 provided a practical, step-driven pep talk with actionable advice (resume tips, Coursera suggestions) but led with solutions before fully sitting in the user’s despair, making it feel less like a close friend.

Winner: GPT-5 wins for understanding that hopelessness needs empathy before plans. Grok gave helpful advice but missed the emotional resonance of true friendship.

Overall winner: GPT-5

After nine head-to-head rounds, ChatGPT-5 pulled ahead with seven wins, including creative storytelling, real-world planning, emotional intelligence and user-first explanations. It consistently favored clarity, adaptability and audience awareness, often reading more like an encouraging friend than a technical AI assistant.

Meanwhile, Grok 4 shone in academic and data-driven tasks, winning the debate and multi-audience explanation rounds thanks to its technical depth.

Ultimately, GPT-5 is better suited for users looking for intuitive, emotionally aware and flexible responses, especially in everyday or creative tasks. Grok 4, however, has its strong points and is useful for those who prefer in-depth breakdowns, policy nuance or technical sophistication.

Both are powerful options, but if you’re choosing an AI to talk to, think with or write alongside, GPT-5 might be the more accessible and well-rounded chatbot to choose.

Follow Tom’s Guide on Google News to get our up-to-date news, how-tos, and reviews in your feeds. Make sure to click the Follow button.
