
I asked ChatGPT-5 to take me behind the scenes of its brain

Despite a wobbly launch, ChatGPT’s latest model, GPT-5, arrived with new features, personalities, and integrations. While these are the most prominent changes, I was curious whether much had changed in the way ChatGPT handles my prompts.

Asking AI chatbots like ChatGPT, Claude, and Gemini straight up to reveal their inner monologue or chain of thought trips their safeguards, and you’ll get a reply along the lines of: “I’m sorry, I can’t share that, but I can give you some general information about how I work instead.”

Seeing these thoughts could be revealing, since they might expose hidden tendencies such as the AI’s biases. Known biases include ChatGPT skewing toward Western views and performing best in English, most likely a result of bias in its training data.

However, after some back and forth, ChatGPT-5 was willing to share a few more details about how it processes my prompts.

1. What GPT-5 looks for in a prompt

(Image: © Tom’s Guide)

While admitting that it couldn’t give me an all-access pass to its brain, ChatGPT said it could create a human-readable mock-up of what goes on behind the scenes when it tackles my prompts.

It explained that it starts by interpreting my prompt. It uses this information to activate relevant knowledge domains before going on to build a structured outline of its answer.

It also highlighted tried-and-tested ways to add context to a prompt. Specifying the target audience for the information helps it phrase the answer appropriately, as does stating the tone you want.

GPT-5 also advised stating any non-negotiables early in your prompt. If you’re asking for help organizing an event on a strict budget, put the budget before the rest of your text.

If you’re looking for the latest tech to buy but are only interested in new releases, slap the cutoff date at the top of your prompt.
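If you reuse the same structure often, the ordering advice above can be turned into a small helper. This is a minimal sketch: `build_prompt` and its parameters are hypothetical names, not a ChatGPT feature, and the party budget is just an example. It puts hard constraints first, then audience and tone, then the task itself.

```python
def build_prompt(task, non_negotiables, audience=None, tone=None):
    """Assemble a prompt with hard constraints first, then context, then the task.

    Hypothetical helper illustrating the "non-negotiables up front" advice.
    """
    lines = []
    if non_negotiables:
        lines.append("Non-negotiable requirements:")
        lines.extend(f"- {item}" for item in non_negotiables)
        lines.append("")  # blank line separates constraints from the rest
    if audience:
        lines.append(f"Audience: {audience}")
    if tone:
        lines.append(f"Tone: {tone}")
    lines.append(f"Task: {task}")
    return "\n".join(lines)


prompt = build_prompt(
    task="Help me plan a birthday party for 20 guests.",
    non_negotiables=["Total budget must not exceed $300."],
    audience="a first-time event organizer",
    tone="friendly and practical",
)
print(prompt)
```

Paste the printed text into ChatGPT as-is; the budget line appears before anything else.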

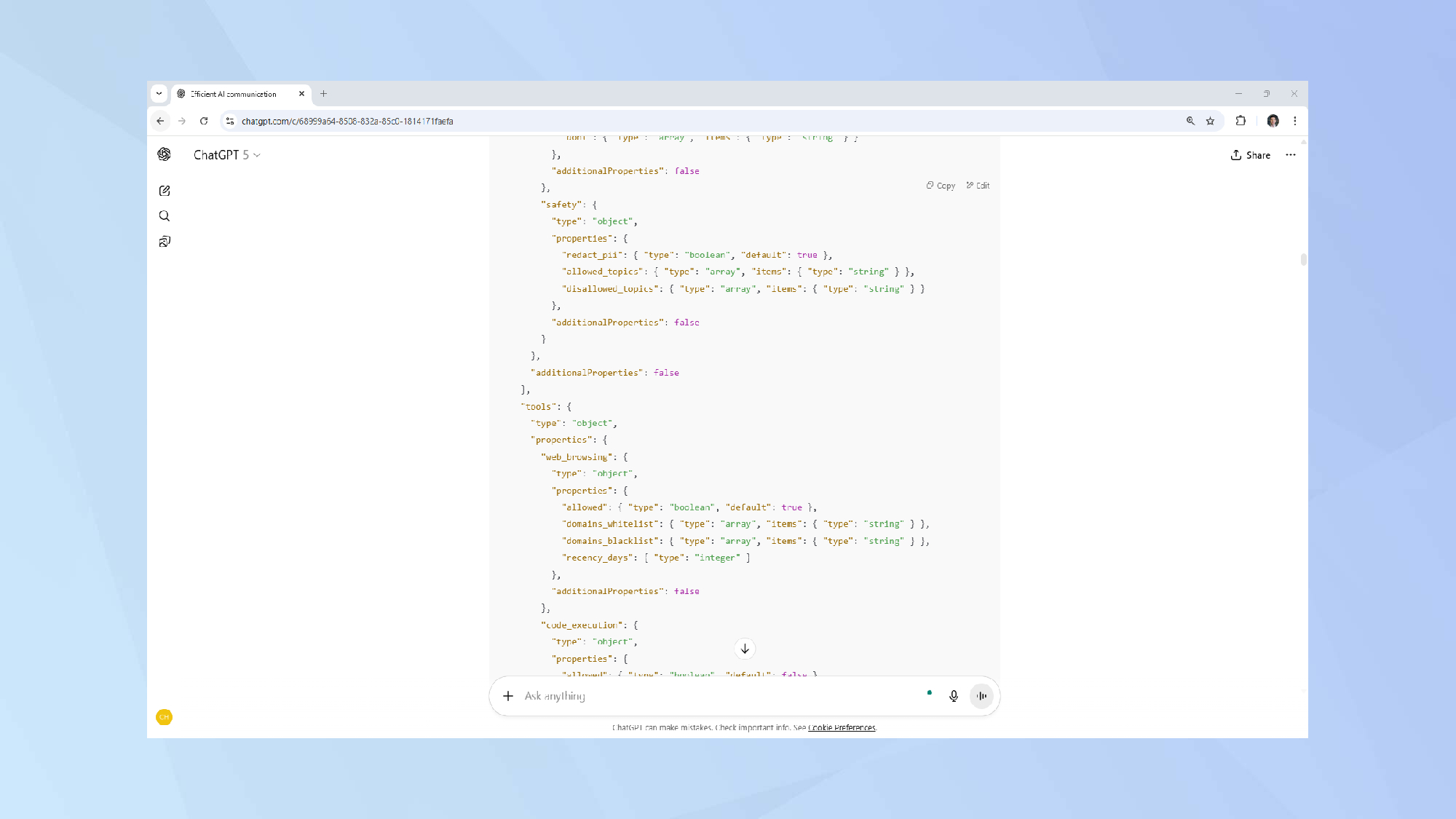

2. Prompt formats

(Image: © Tom’s Guide)

Prompts phrased as though you were talking to your best friend work well with ChatGPT, but the chatbot also revealed that writing prompts as a JSON template (JSON is a structured text format that resembles code) can be an efficient way to communicate when you want to set multiple strict parameters.

Such a template may make your own prompt harder to read, but it can make it easier to spot whether you’ve left out any key requirements.
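Because a template has named fields, spotting a missing requirement can even be automated. The sketch below is an illustration, not something ChatGPT provides: `find_missing` and the field names in `draft` are hypothetical, and "empty" is assumed to mean `None`, an empty string, an empty list, or an empty section.

```python
def find_missing(template, path=""):
    """Return dotted paths of fields left empty (None, "", [] or {})."""
    missing = []
    for key, value in template.items():
        full_key = f"{path}.{key}" if path else key
        if isinstance(value, dict) and value:
            # Recurse into non-empty sections of the template.
            missing.extend(find_missing(value, full_key))
        elif value in (None, "", [], {}):
            missing.append(full_key)
    return missing


draft = {
    "task": "Recommend a laptop",
    "audience": "",
    "constraints": {"max_budget_usd": 1000, "release_after": None},
}
print(find_missing(draft))  # ['audience', 'constraints.release_after']
```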

One section of ChatGPT’s proposed template let you specify which websites and topics the chatbot is and isn’t allowed to use. Another set the knowledge level of the output and the maximum word count.
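To give a sense of what such a template might look like, here is a rough sketch built in Python and printed as JSON. The field names (`allowed_websites`, `excluded_topics`, `knowledge_level`, `max_word_count`) are illustrative guesses based on the sections described above, not ChatGPT’s actual template, and the values are examples.

```python
import json

# Hypothetical template; field names and values are illustrative only.
prompt_template = {
    "task": "Summarize recent research on sleep and memory",
    "audience": "high-school students",
    "tone": "neutral",
    "sources": {
        "allowed_websites": ["nih.gov"],
        "excluded_topics": ["supplements"],
    },
    "output": {
        "knowledge_level": "beginner",
        "max_word_count": 200,
    },
}

# Serialize to indented JSON text you can paste at the start of a chat.
prompt_text = json.dumps(prompt_template, indent=2)
print(prompt_text)
```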

To create your own template, use this prompt: “Create a fill-in-ready, universal JSON template that I can use for future ChatGPT prompts.”

If you don’t need to be this granular, it’s enough to keep in mind the principles the template emphasizes: re-read your prompt and ask yourself whether any part of it could be more specific.

For example, instead of simply asking for a summary of a piece of text, ask for a 200-word, single-paragraph summary.
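A concrete limit also gives you something to check the reply against. This minimal sketch uses a hypothetical `within_word_limit` helper that counts whitespace-separated words, so its count may differ slightly from a word processor’s.

```python
def within_word_limit(text, limit):
    """Return True if `text` has at most `limit` whitespace-separated words."""
    return len(text.split()) <= limit


reply = "Paste ChatGPT's reply here."
print(within_word_limit(reply, 200))
```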

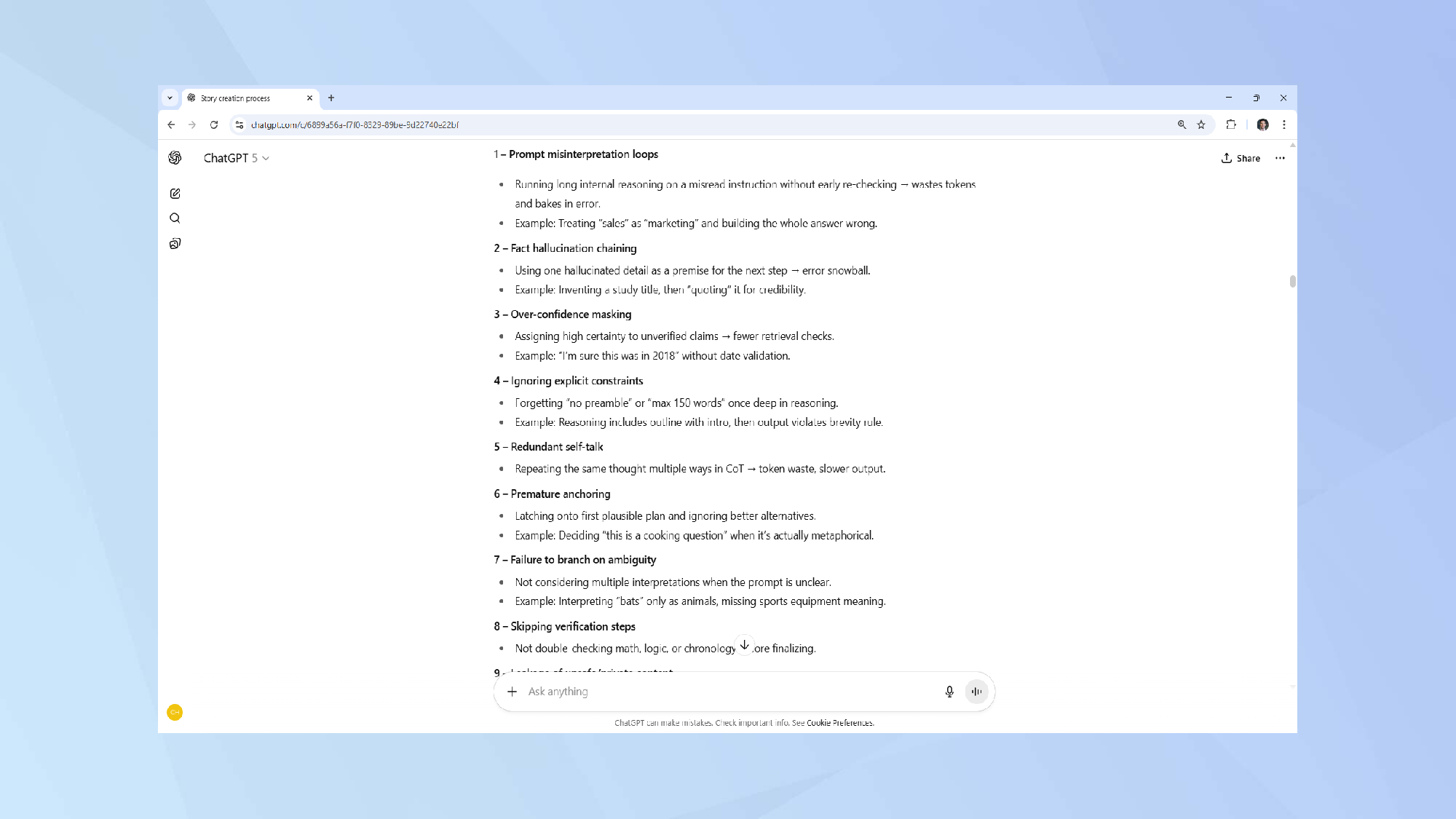

3. What could go wrong?

(Image: © Tom’s Guide)

Although asking ChatGPT to reveal its complete chain of thought didn’t work, I did learn about the mistakes it could make if it misinterpreted a prompt.

These include the following:

- Misinterpretation loops: Running a long internal chain of reasoning from a misread instruction without re-checking early, such as treating “sales” as “marketing” and building the whole answer on that mistake.

- Hallucination chain: Using a hallucinated detail as a premise for the next step, like inventing a study and then ‘quoting’ from it for credibility.

- Premature anchoring: Latching onto the first plausible plan while ignoring better alternatives, such as treating a prompt as being about cooking when the cooking is only a metaphor.

- Skipping verification steps: Not double-checking math, logic, or chronology before delivering its final answer.
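One way to push back against these failure modes is to ask for the missing checks explicitly. The sketch below is an assumption on my part rather than advice ChatGPT gave: `add_safeguards` is a hypothetical helper, and there is no guarantee the model follows every instruction it appends.

```python
def add_safeguards(prompt):
    """Append instructions targeting the failure modes listed above.

    Hypothetical wording; it requests the checks, it cannot enforce them.
    """
    safeguards = [
        "Before answering, restate my request in one sentence.",  # misinterpretation loops
        "Flag any source you cannot verify instead of quoting it.",  # hallucination chain
        "Consider at least two interpretations before choosing one.",  # premature anchoring
        "Before finishing, double-check any math, logic and dates.",  # skipped verification
    ]
    return prompt.rstrip() + "\n\n" + "\n".join(f"- {s}" for s in safeguards)


print(add_safeguards("Compare our sales figures for Q1 and Q2."))
```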

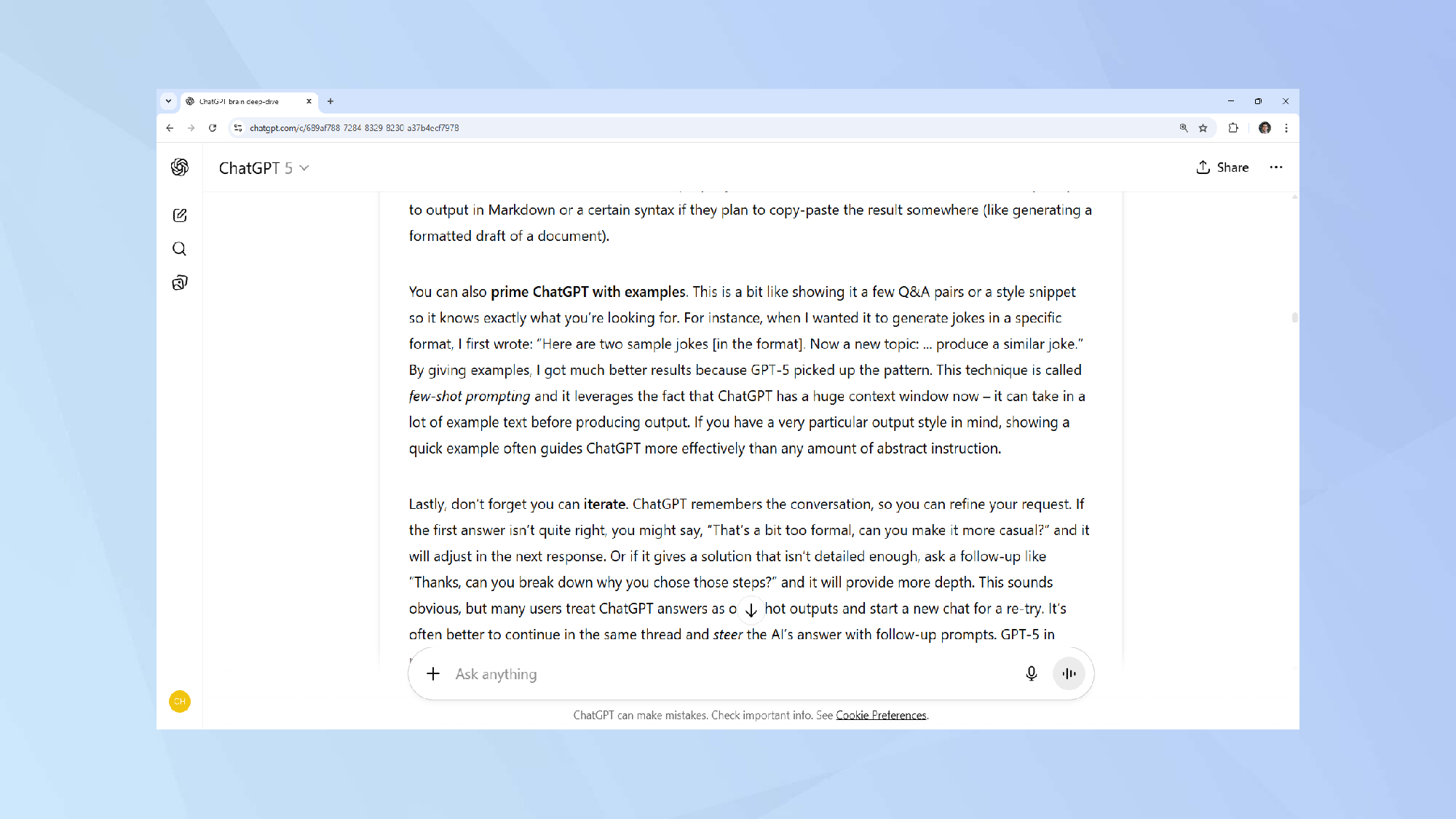

4. Few-shot prompting

(Image: © Tom’s Guide)

When I asked ChatGPT to list everything it considers when answering a prompt, it confirmed that a technique known as few-shot prompting works. This involves sharing examples of what your ideal output looks like.

“If you have a very particular output style in mind, showing a quick example often guides ChatGPT more effectively than any amount of abstract instruction,” ChatGPT explained.

So, if you always reply to emails in the same way, sharing a couple of examples could go a long way toward helping ChatGPT craft responses you’d actually use.
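Here is a minimal sketch of how a few-shot prompt for email replies could be assembled. The `few_shot_prompt` helper, the “Email:/Reply:” labels, and the sample emails are all hypothetical; any consistent layout should work, as long as the examples come first and the final reply is left blank for ChatGPT to fill in.

```python
def few_shot_prompt(examples, new_email):
    """Build a few-shot prompt: worked examples first, then the new case.

    `examples` is a list of (incoming_email, my_reply) pairs.
    """
    parts = ["Reply to the last email in the same style as my example replies.\n"]
    for incoming, reply in examples:
        parts.append(f"Email: {incoming}\nReply: {reply}\n")
    # Leave the final reply blank so the model completes it.
    parts.append(f"Email: {new_email}\nReply:")
    return "\n".join(parts)


examples = [
    ("Can we move our call to Friday?", "Hi! Friday works for me. Talk then, Sam"),
    ("Could you send the slides?", "Hi! Slides attached. Best, Sam"),
]
print(few_shot_prompt(examples, "Are you free for lunch next week?"))
```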

Final thoughts

Understanding how the LLM you’re using makes decisions based on your prompt has several benefits. It can help you work out what’s going wrong when the outputs aren’t what you hoped for, and it could help you work around restrictions when you have a legitimate reason to.

Visibility into a model’s reasoning also helps its creators monitor it to ensure it behaves correctly. A recent study flagged that as LLMs become more advanced, their chain of thought could become unreadable to humans, or be disguised by the AI if it detects that it is being monitored.

